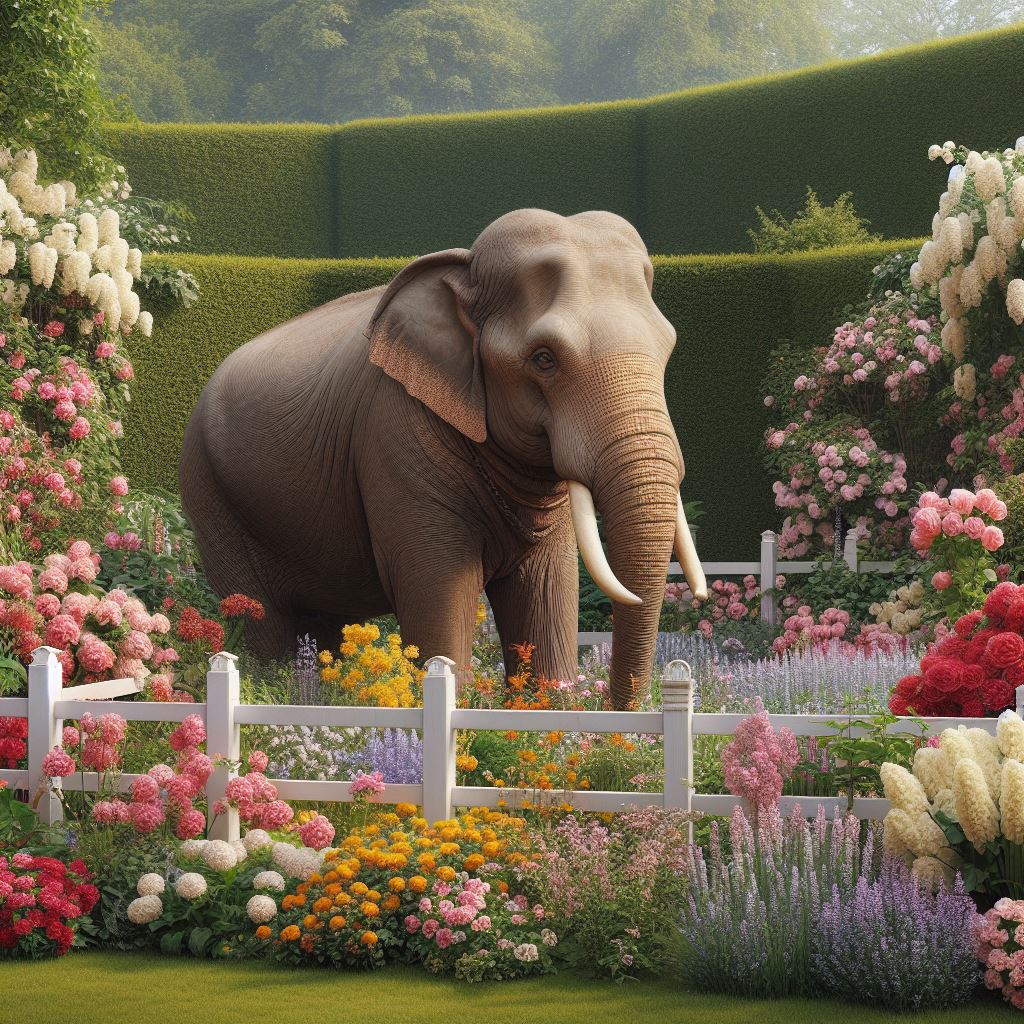

Elephant in the Garden

Continuing from the previous blog post, which was written entirely by artificial intelligence (except for the introductory line), I’ve been thinking about the opportunities and dangers of AI.

The big lesson? VIGILANCE.

AI isn’t a fad. It isn’t going to go away. It is going to become increasingly important. The genie cannot be put back in the lamp, the toothpaste in the tube, nor Pandora’s box slammed shut again. And, since open source models are already out in the wild, any attempt to regulate its use is doomed to failure.

But let’s be clear about what it is. It’s a set of rules connected to a database of material. That remains true however complex the engine becomes, and however many new rules of its own it generates. It is still a parrot, stringing together phrases or visual elements that appear convincing because humans try to see meaning in what they see and hear.

There is no meaning, no intent in AI’s results except what we impose on them. If scientific problems have been solved, that’s wonderful – but they are solved by human interpretation of AI suggestions. The fact that humans might never have made the suggestions does not mean they are the result of a superior intelligence.

In terms of so-called “generative AI” – creating new works in whatever form – creative workers clearly have something to worry about. Obviously, as a writer, I’m astonished and outraged at the spectacular theft of written and graphic work perpetrated in “teaching” the models behind the various AI systems. It’s plunder on a scale never seen before. (One of the founders of OpenAI has said, "But we couldn't have developed it without access to copyright material." Well, exactly...)

Machine-generated work will not replace art, nor the top end of the creative industries. (Although by imposing a meaning on something, we might convince ourselves otherwise. And you could say about any art that the viewer or reader imposes their own meaning, but that’s a whole different discussion.)

But the people who produce more (shall we say) everyday content, written or visual, are likely to find themselves under threat from AI systems. The human work was “good enough”, and so will be the machine-generated competition. But cheaper.

Still, as with the introduction of any new technology, unexpected opportunities will arise. People will adjust. Even though the adjustment period will be painful.

The larger threat, we hear, is that AI will become sentient and take over the world – the “Terminator” scenario. Well, I don’t believe that the world will shortly be taken over by robots.

No, for me the much bigger danger lies in trusting too much, and questioning too little.

Ever since the early days of computing, people have believed almost anything because it looked good on the screen, often resulting in a “computer says no” mentality – a blind and unquestioning trust in what is displayed on the screen. Now, more than ever, you have to ask why the computer says no, especially if what you see comes from a machine learning system (artificial intelligence, as we call it). And let's not forget the Post Office scandal, where the computer said hundreds of postmasters were committing fraud and the Post Office bosses refused to consider that the computer system might be at fault.

Look at my previous post, written entirely by AI. It sounds convincing. It’s not badly written in the general scheme of things. But it is entirely wrong.

In this instance (Shakespeare using AI) most people would realise that something wasn’t right. But what if you didn’t know? What if you were looking to the article to give you the facts? AI does this all the time. You could say that it is what AI does. It strings together phrases from its database. It’s up to you to decide whether the results are reasonable.

Suppose AI says there’s an elephant in my garden. That sounds odd, but not unreasonable. But there is no elephant. But, oh, here’s a picture of it. (below)

Now, who do you believe? Me, or the AI picture?

It’s frightening.

So what’s the real lesson? The real lesson is that you shouldn’t trust anything that you read or see. Nothing at all. Bleak, isn’t it?